Machine Learning (ML) is an integral part of everything we do at Wayfair to support each of the 30 million active customers on our website. It enables us to make context-aware, real-time, and intelligent decisions across every aspect of our business. We use ML models to forecast product demand across the globe, ensuring our customers can quickly access what they’re looking for. Natural language processing (NLP) models are used to analyze chat messages on our website so customers can be redirected to the appropriate customer support team as quickly as possible, without having to wait for a human assistant to become available. Wayfair’s commitment to MLOps Acceleration is evident in every aspect of our operations.

Integrating Machine Learning at Wayfair

- Machine learning integration at Wayfair enhances customer experiences through personalized recommendations and tailored product suggestions.

- Advanced algorithms analyze vast amounts of data to predict customer preferences and behaviors, enabling targeted marketing strategies.

- Machine learning models optimize inventory management by forecasting demand and minimizing stockouts, ensuring products are readily available for customers.

- Predictive analytics inform pricing strategies, promotional campaigns, and inventory allocation, maximizing revenue and profitability.

- Wayfair’s commitment to integrating machine learning drives innovation, efficiency, and competitiveness in the e-commerce landscape.

Leveraging ML for Enhanced Customer Support

- Machine learning, backed by MLOps Acceleration, helps support teams deliver efficient and personalized assistance.

- Machine learning algorithms analyze customer interactions to identify patterns, anticipate needs, and deliver proactive support solutions.

- Automated chatbots powered by machine learning provide immediate responses to customer inquiries, reducing wait times and improving satisfaction.

- Sentiment analysis tools analyze customer feedback to identify areas for improvement and enhance overall support experiences.

- Machine learning enables predictive maintenance of support systems, ensuring uninterrupted service and minimizing downtime for customers.

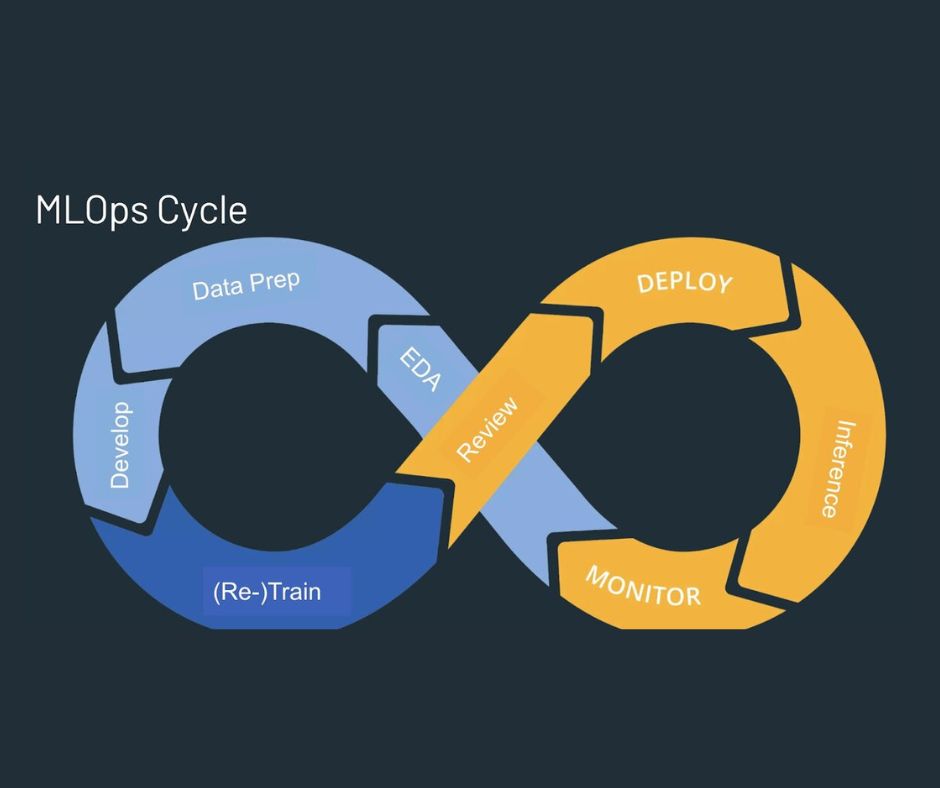

Commitment to MLOps Acceleration

- Wayfair’s commitment to MLOps Acceleration drives innovation and efficiency in machine learning operations.

- Implementing MLOps Acceleration strategies enhances the scalability and reliability of machine learning systems.

- MLOps Acceleration ensures seamless integration of machine learning models into production environments, optimizing performance and agility.

- By prioritizing MLOps Acceleration, Wayfair accelerates the development and deployment of machine learning solutions to meet evolving business needs.

- Continuous improvement initiatives focus on MLOps Acceleration, fostering a culture of innovation and agility within the organization.

Technology Strategy for Competitiveness with MLOps Acceleration

In today’s fast-paced business landscape, a robust technology strategy is crucial for staying competitive. Making MLOps Acceleration a cornerstone of that strategy lets organizations optimize their machine learning operations and deploy models with agility, scalability, and efficiency. It also shortens innovation cycles, so businesses can adapt quickly to changing market demands and unlock new opportunities for growth.

Evolution of Infrastructure and Tools with MLOps Acceleration

MLOps Acceleration is transforming the infrastructure and tools that organizations use to deploy and manage machine learning. With a focus on efficiency and scalability, it pairs cutting-edge technologies with streamlined processes, from advanced data pipelines to automated model deployment, to improve agility and performance. Organizations that embrace this evolution can innovate faster, integrate models more smoothly, and get more value from their machine learning initiatives.

Adoption of Vertex AI for ML

The adoption of Google Cloud’s Vertex AI in 2021 showcases Wayfair’s readiness to embrace new solutions for ML advancements.

- Vertex AI streamlines machine learning (ML) workflows, enabling seamless adoption and deployment of ML models.

- With Vertex AI, organizations can accelerate model development and deployment, reducing time-to-market and enhancing productivity.

- Advanced features such as AutoML capabilities and unified model management simplify the ML lifecycle, from data preparation to model evaluation.

- Vertex AI offers scalability and flexibility, allowing organizations to efficiently manage ML workloads of any size and complexity.

- By adopting Vertex AI, businesses can leverage Google Cloud’s powerful infrastructure and industry-leading AI technologies to drive innovation.

- Experience the benefits of streamlined ML adoption with Vertex AI, empowering your organization to unlock new insights and drive business growth.

One AI Platform with All the ML Tools Needed

Vertex AI enables us to build software that runs on any infrastructure. We also enjoyed how the tool looks, feels, and operates. Within six months, we moved from configuring our infrastructure manually, to conducting a proof of concept (POC), to a first production release.

Next on our priority list was to use Vertex AI Feature Store to serve and use ML features in real time, or in batch, with a single line of code. Vertex AI Feature Store fully manages and scales its underlying infrastructure, such as storage and compute resources. As a result, our data scientists can now focus on feature computation logic instead of worrying about the challenges of storing features for offline and online usage.
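To make the offline versus online distinction concrete, here is a minimal in-memory sketch. It is not the Vertex AI Feature Store API: the class `ToyFeatureStore`, its methods, and the feature name `views_7d` are all hypothetical, chosen only to show how the same written features can back both a low-latency lookup and a point-in-time batch read.

```python
from datetime import datetime


class ToyFeatureStore:
    """In-memory stand-in for a managed feature store (hypothetical, not the Vertex AI API)."""

    def __init__(self):
        self._history = []  # offline store: every write, kept for training sets
        self._latest = {}   # online store: newest values per entity, for fast reads

    def write(self, entity_id, features, timestamp):
        # Every write lands in the offline history.
        self._history.append((timestamp, entity_id, dict(features)))
        # The online view only keeps the most recent values per entity.
        current = self._latest.get(entity_id)
        if current is None or timestamp >= current[0]:
            self._latest[entity_id] = (timestamp, dict(features))

    def read_online(self, entity_id):
        """Return the newest feature values for one entity."""
        return dict(self._latest[entity_id][1])

    def read_batch(self, as_of):
        """Return, per entity, the newest feature values written at or before `as_of`."""
        rows = {}
        for timestamp, entity_id, features in sorted(self._history, key=lambda r: r[0]):
            if timestamp <= as_of:
                rows[entity_id] = features
        return rows


store = ToyFeatureStore()
store.write("customer_1", {"views_7d": 3}, datetime(2021, 1, 1))
store.write("customer_1", {"views_7d": 9}, datetime(2021, 2, 1))

online = store.read_online("customer_1")              # one line for real-time serving
batch = store.read_batch(as_of=datetime(2021, 1, 15))  # one line for a training snapshot
```

In a managed service, the storage and scaling behind `_history` and `_latest` are handled by the platform, which is why the data scientist only has to supply the feature computation logic.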

While our data scientists are proficient in building and training models, they are less comfortable setting up the infrastructure and bringing the models to production. When we embarked on an MLOps transformation, it was important for us to enable data scientists to leverage a platform as seamlessly as possible without having to know all about its underlying infrastructure. To that end, our goal was to build an abstraction on top of Vertex AI. Our simple Python-based library interacts with Vertex AI Pipelines and Vertex AI Feature Store, so a typical data scientist can leverage this setup without having to know how Vertex AI works in the backend. That’s the vision we’re marching towards, and we’ve already started to notice its benefits.
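The idea of such an abstraction layer can be sketched as a thin facade over a swappable backend. This is an illustration only, not Wayfair's library: `MLPlatform`, `PipelineBackend`, `LocalBackend`, and `run_training` are hypothetical names, and the local backend simply runs steps in-process where a real one would submit work to a managed pipeline service.

```python
from typing import Callable, List, Protocol

Step = Callable[[dict], dict]


class PipelineBackend(Protocol):
    """What the facade needs from any backend (hypothetical interface)."""

    def submit(self, name: str, steps: List[Step], params: dict) -> dict: ...


class LocalBackend:
    """Runs steps in-process; a real backend would call a managed pipeline service."""

    def submit(self, name: str, steps: List[Step], params: dict) -> dict:
        state = dict(params)
        for step in steps:
            state = step(state)  # each step receives and returns the shared state
        return state


class MLPlatform:
    """Facade the data scientist sees; backend details stay hidden behind it."""

    def __init__(self, backend: PipelineBackend):
        self._backend = backend

    def run_training(self, name: str, steps: List[Step], **params) -> dict:
        return self._backend.submit(name, steps, params)


def load(state: dict) -> dict:
    # Hypothetical data-loading step.
    return {**state, "rows": [1, 2, 3, 4]}


def train(state: dict) -> dict:
    # Hypothetical "training" step: the model is just the mean of the rows.
    return {**state, "model_mean": sum(state["rows"]) / len(state["rows"])}


result = MLPlatform(LocalBackend()).run_training("demo", [load, train], seed=7)
```

Because the data scientist only calls `run_training`, the backend can change without touching their code, which is the property the paragraph above describes.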

Reducing Hyperparameter Tuning From Two Weeks to Under One Hour

We enjoy using open source tools such as Apache Airflow, but the way we were using it was creating issues for our data scientists. We frequently ran into infrastructure challenges carried over from our legacy technologies, such as support issues and failed jobs. To remove the complexity of model maintenance, we built a CI/CD pipeline using Vertex AI Pipelines, which is based on Kubeflow.

Now everything is well arranged, documented, scalable, easy to test, and organized according to best practices. This incentivizes people to adopt a new standardized way of working, which in turn brings its own benefits. One example is hyperparameter tuning, an essential part of controlling the behavior of a machine learning model.

In machine learning, hyperparameter tuning or optimization is the problem of choosing a set of optimal hyperparameters for a learning algorithm. A hyperparameter is a parameter whose value is used to control the learning process. Every machine learning model has its own hyperparameters, whose values are set before the learning process begins. A good choice of hyperparameters can make an algorithm perform optimally.
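As a small illustration of what tuning involves, the sketch below runs an exhaustive grid search in plain Python. It is not the tuning setup described in this post: `toy_score` is a hypothetical stand-in for "train a model and return its validation score", and the hyperparameter names `learning_rate` and `depth` are assumptions made for the example.

```python
import itertools


def grid_search(train_and_score, grid):
    """Try every combination in `grid` and return (best_params, best_score)."""
    names = sorted(grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = train_and_score(**params)  # higher is better
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_score(learning_rate, depth):
    # Hypothetical scoring function that peaks at learning_rate=0.1, depth=4.
    return -((learning_rate - 0.1) ** 2) - 0.01 * (depth - 4) ** 2


best_params, best_score = grid_search(
    toy_score,
    {"learning_rate": [0.01, 0.1, 1.0], "depth": [2, 4, 8]},
)
```

Even this tiny grid requires nine training runs, and the count multiplies with every hyperparameter added, which is why running the trials on managed, parallel infrastructure matters so much for turnaround time.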

Tuning hyperparameters in Python on our legacy infrastructure would take a data scientist two weeks on average. We have over 100 data scientists at Wayfair, so standardizing this practice and making it more efficient was a priority for us.

With a standardized way of working on Vertex AI, all our data scientists can now leverage our code to access CI/CD, monitoring, and analytics out-of-the-box to conduct hyperparameter tuning in just one day.

Powering Great Customer Experiences with More ML-Based Functionalities

Next, we’re working on a Docker container template that will enable data scientists to deploy a running ‘hello world’ Vertex AI pipeline. On average, it can take a data science team more than two months to get an ML model fully operational. With Vertex AI, we expect to cut that time down to two weeks. Like most of the things we do, this will have a direct impact on our customer experience.

It’s important to remember that some ML models are more complex than others. When a model’s output is shown to customers while they browse the website, that output must be accurate, and it must appear on-screen extremely quickly. That means these models have the highest requirements and are the most difficult to publish to production.

We’re actively working on building and implementing tools to streamline and enable continuous monitoring of our data and models in production, which we want to integrate with Vertex AI. We believe in the power of AutoML to build models faster, so our goal is to evaluate all these services in GCP and then find a way to leverage them internally.

And it’s already clear that the new ways of working enabled by Vertex AI not only make the lives of our data scientists easier, but also have a ripple effect that directly impacts the experience of millions of shoppers who visit our website daily. They’re all experiencing better technology and more functionalities, faster.

For a more detailed dive on how our data scientists are using Vertex AI, look for part two of this blog coming soon.